In this blog post I'll share some of my experiences with folktologies. Unfortunately I don't have an updated scientific study of fuzzzy.com's folktology, so you'll have to make do with my personal observations. To learn more about fuzzzy.com and its folktology, read my paper "Metadata Creation in Socio-semantic Tagging Systems: Towards Holistic Knowledge Creation and Interchange".

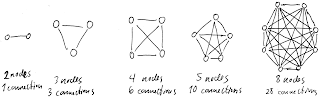

Folksonomies, as we have come to know them on many social media sites, let users create any tag, and each new tag becomes available to everyone else. An ontology, on the other hand, is a set of concepts within a domain and the relationships between those concepts. Ontologies are the backbone of the Semantic Web, so Fuzzzy was a project to see whether imprecise folksonomies could be replaced with more semantic folktologies. Typical problems with folksonomies include synonyms, ambiguous tags, overly personalised and inexact tags, homonyms, plural and singular forms, conjugated words and compound words [1]. While folksonomies work just fine for a blog, they do not scale as well when semantics matter. As a folksonomy grows, it turns into a large flat list of tags, a large share of which simply don't make sense. To work around this, sites usually show only the most frequently used tags.
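
To make the plural/singular and compound-word problems concrete, here is a minimal Python sketch of the kind of naive normalization a tagging site might apply before counting tags and showing only the most frequent ones. The function names `normalize_tag` and `top_tags` are hypothetical, and the rules assume English tags. Note what it cannot do: it merges "Topic_Maps" with "topic maps", but it keeps "semanticweb" apart from "semantic web" and has no way at all to detect synonyms or homonyms.

```python
from collections import Counter


def normalize_tag(tag: str) -> str:
    """Naive tag normalization (assumes English tags).

    Lowercases, treats '_' and '-' as spaces, collapses whitespace and
    crudely singularizes the last word. Synonyms and homonyms are untouched.
    """
    t = tag.strip().lower().replace("_", " ").replace("-", " ")
    t = " ".join(t.split())  # collapse repeated whitespace
    if t.endswith("ies") and len(t) > 4:
        t = t[:-3] + "y"  # "ontologies" -> "ontology"
    elif t.endswith("s") and not t.endswith("ss") and len(t) > 3:
        t = t[:-1]  # "topic maps" -> "topic map", but "glass" stays "glass"
    return t


def top_tags(raw_tags: list[str], n: int = 3) -> list[tuple[str, int]]:
    """Return the n most frequent tags after normalization."""
    counts = Counter(normalize_tag(t) for t in raw_tags)
    return counts.most_common(n)


if __name__ == "__main__":
    tags = ["Semantic-Web", "semantic web", "semanticweb", "Ontologies",
            "ontology", "topic maps", "Topic_Maps", "furniture"]
    print(top_tags(tags))
```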

How the folktology of fuzzzy.com was used

A folktology seemed a smart use of Topic Maps, and it was meant to make tagging more semantic. Here's what actually happened:

- Few users created relations between tags.

- Few users assigned subject identifiers (needed to tell whether two tags represent the same subject or different ones).

- Few users voted on relations between tags.

- Few users voted on relations between tags and bookmarks.
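
The features listed above amount to a small data model: tags that may carry a subject identifier, typed relations between tags, and user votes on those relations. The Python sketch below is an assumption about what such a model could look like, not fuzzzy.com's actual implementation; the class names, the relation types and the +1/-1 voting scheme are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Tag:
    name: str
    # Hypothetical: a URL identifying the subject (e.g. a PSI); None if never assigned.
    subject_identifier: Optional[str] = None
    description: str = ""


@dataclass
class TagRelation:
    source: str    # name of the source tag
    relation: str  # hypothetical relation type, e.g. "broader-than" or "related-to"
    target: str    # name of the target tag
    votes: dict[str, int] = field(default_factory=dict)  # user id -> +1 or -1

    def vote(self, user: str, value: int) -> None:
        """Record one vote per user; voting again replaces the earlier vote."""
        if value not in (1, -1):
            raise ValueError("vote must be +1 or -1")
        self.votes[user] = value

    @property
    def score(self) -> int:
        return sum(self.votes.values())


def related_tags(relations: list[TagRelation], tag: str, min_score: int = 1) -> list[str]:
    """Tags reachable from `tag` through relations that have been voted up."""
    return sorted(r.target for r in relations if r.source == tag and r.score >= min_score)
```

In this model, a relation nobody votes on keeps a score of zero and is never suggested, so the value of the network depends directly on how many users take part.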

Users did, however, use the tags as if they were a folksonomy. Users created both tags and bookmarks and added tags to bookmarks. Tag quality was better [3], as users could edit tags and make them more consistent. Tags were more intuitive and made more sense when used on bookmarks. Common folksonomy problems such as synonyms, ambiguity, overly personalised tags, homonyms, and plural and singular forms were to a large degree weeded out. So why were the more semantic features largely left unused? These are my observations:

- People already had a fixed idea of how tagging should work and did not care to learn about the additional tag features.

- People are in a hurry when tagging. They bookmark a page because they don't have time to read it right now, so it's very important to be able to add bookmarks quickly and to assign tags just as quickly.

- Bookmarking is personal even if you use a social service. Every user has their own reasons for saving particular bookmarks, so he or she has little incentive to build a pretty shared ontology or tag network.

- The rewards for creating tag-to-tag relations are perceived as low. Users don't see any direct value. With a built-out folktology or semantic network, users can have more relevant bookmarks suggested to them. This is obviously a nice-to-have feature, but it does not justify the work that goes into managing the folktology.

- The advanced tagging features become overkill and a source of cognitive overload for regular web users.

Problems with a folktology

The social and semantic bookmarking service fuzzzy.com has a shared semantic tag set. This introduces several issues compared with plain and simple folksonomies. I group these issues under two headings: context and authority.

- Who gets to update the individual tags? A tag is shared and reused by all. Say one person creates the tag “vintage furniture”, and another person wants to use the same tag but would rather have a different description for it, or thinks it should have a different name altogether (language and vocabularies naturally evolve over time). Then what? Who gets to decide? The meaning of a tag depends on the context of the person using it: their situation and background decide what is meaningful to them, and no two people have exactly the same context.

- For tags to be semantically interoperable, each tag needs an addressable identifier (a PSI, or Published Subject Identifier, in Topic Maps jargon) and a particular semiotic meaning attached to it. An authority or some other entity must make sure that the identifier and the meaning of each tag stay fairly stable. In an open web environment this is not trivial.

- One person's definition of a term might differ slightly from another's because they have different backgrounds. It would not be right to force them to use a definition that does not reflect how they see the world.

- What if a tag name has to change because language changes? If it is changed, other users might not find what they are looking for. One could present old tag names marked as deprecated, but that still imposes a new world view on users. In some cases (perhaps on a corporate intranet) this might be a good thing.

- Who will garden the tag set and have the last say? What if a user wants to delete a semantic tag they created, but the tag has since been adopted by many other users?
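
One common way to soften the renaming problem is to identify a tag by a stable subject identifier rather than by its name, and to keep old names as deprecated aliases that still resolve. The Python sketch below assumes that approach; `TagRegistry`, its methods and the `http://example.org/psi/...` identifiers are hypothetical and not part of fuzzzy.com or of any Topic Maps library.

```python
from dataclasses import dataclass, field


@dataclass
class SharedTag:
    psi: str   # stable subject identifier (hypothetical URL scheme)
    name: str  # current preferred name
    deprecated_names: list[str] = field(default_factory=list)


class TagRegistry:
    """Hypothetical shared tag set keyed by subject identifier, not by name."""

    def __init__(self) -> None:
        self._by_psi: dict[str, SharedTag] = {}
        self._by_name: dict[str, str] = {}  # any name (current or deprecated) -> PSI

    def create(self, psi: str, name: str) -> SharedTag:
        if psi in self._by_psi or name in self._by_name:
            raise ValueError("PSI or name already in use")
        tag = SharedTag(psi, name)
        self._by_psi[psi] = tag
        self._by_name[name] = psi
        return tag

    def rename(self, psi: str, new_name: str) -> None:
        tag = self._by_psi[psi]
        owner = self._by_name.get(new_name)
        if owner is not None and owner != psi:
            raise ValueError(f"{new_name!r} already names another subject")
        if new_name == tag.name:
            return
        if new_name in tag.deprecated_names:
            tag.deprecated_names.remove(new_name)  # reviving an old name
        tag.deprecated_names.append(tag.name)      # old name still resolves, flagged deprecated
        tag.name = new_name
        self._by_name[new_name] = psi

    def lookup(self, name: str) -> tuple[SharedTag, bool]:
        """Return the tag for a name and whether that name is deprecated."""
        tag = self._by_psi[self._by_name[name]]
        return tag, name != tag.name
```

The sketch deliberately leaves out who may call `rename` or delete a tag; that is exactly the authority question raised above, and code alone cannot answer it.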

Conclusion

An ontology is a generic, commonly agreed upon specification of a conceptualization of a domain [2]. This definition is not compatible with open, online, social Web 2.0 environments where people do not share a common understanding. This, together with the issues above, suggests that a folktology such as the one on fuzzzy.com is best suited for personal use or for coherent teams that have a large number of bookmarks.

One way to fix these folktology issues could be personal topic maps that overlap with other users' topic maps. For this to work with some degree of semantic precision, each node could have a subject identifier, or node similarity could be deduced from node names and neighbouring nodes.
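
The matching step this idea needs could look roughly like the Python sketch below. It assumes a personal topic map can be reduced to a dictionary from node name to an optional subject identifier plus a set of neighbouring node names. Nodes sharing an identifier are treated as the same subject, nodes with different identifiers as different subjects, and otherwise a weighted mix of name similarity (`difflib.SequenceMatcher`) and neighbour overlap (Jaccard index) decides. The function names, the weight and the threshold are arbitrary, hypothetical choices.

```python
from difflib import SequenceMatcher
from typing import Optional

# Assumed simplification: node name -> (subject identifier or None, neighbour names)
PersonalMap = dict[str, tuple[Optional[str], set[str]]]


def name_similarity(a: str, b: str) -> float:
    """Case-insensitive string similarity in [0, 1]."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def neighbour_similarity(na: set[str], nb: set[str]) -> float:
    """Jaccard overlap of lowercased neighbour names."""
    a = {n.lower() for n in na}
    b = {n.lower() for n in nb}
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def match_nodes(mine: PersonalMap, theirs: PersonalMap,
                name_weight: float = 0.6, threshold: float = 0.7) -> dict[str, str]:
    """Map each node in `mine` to its most similar node in `theirs`, if any."""
    matches: dict[str, str] = {}
    for node, (psi, neighbours) in mine.items():
        best: Optional[str] = None
        best_score = 0.0
        for other, (other_psi, other_neighbours) in theirs.items():
            if psi is not None and other_psi is not None:
                if psi == other_psi:
                    best, best_score = other, 1.0  # same identifier: same subject
                    break
                continue  # different identifiers: explicitly different subjects
            score = (name_weight * name_similarity(node, other)
                     + (1 - name_weight) * neighbour_similarity(neighbours, other_neighbours))
            if score > best_score:
                best, best_score = other, score
        if best is not None and best_score >= threshold:
            matches[node] = best
    return matches
```

Because the maps stay separate and only a mapping between them is computed, each user keeps their own names and definitions.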

References